
Hi, I'm a Research Scientist at AIRoA, working on Vision-Language-Action (VLA) systems. I also work as a Cooperative Research Fellow at the Institute of Industrial Science, UTokyo.

E-mail: tohkawa [dot] contact [at] gmail [dot] com


[Jan 2026] Received the JSPS Ikushi Prize for my PhD research!


I'm interested in computer vision, machine learning, and robotics for embodied AI, aiming to enable agents that can perceive, understand, and generate interactions in the real world. My research interests include:

If you're interested in working with me, please visit my contact page and feel free to reach out!


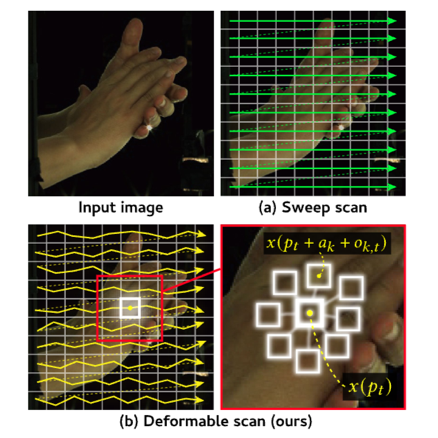

Yifan Zhou*, Takehiko Ohkawa*, Guwenxiao Zhou, Kanoko Goto, Takumi Hirose, Yusuke Sekikawa, and Nakamasa Inoue (*equal contribution)

IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2026

HANDS, International Conference on Computer Vision Workshops (ICCVW), 2025

[Project] [Paper]

We propose Deformable Mamba (DF-Mamba), a novel framework for visual feature extraction in 3D hand pose estimation built on recent state space models (i.e., Mamba). DF-Mamba is designed to capture global context cues through Mamba's selective state modeling combined with deformable state scanning.


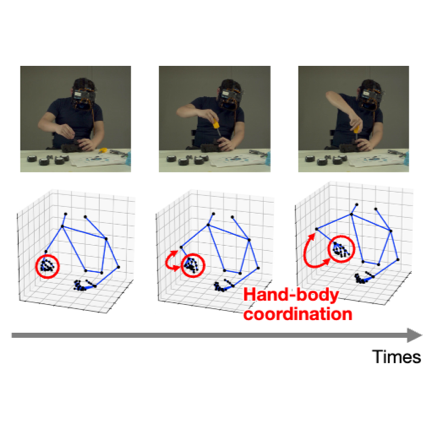

Tatsuro Banno, Takehiko Ohkawa, Ruicong Liu, Ryosuke Furuta, and Yoichi Sato

HANDS, International Conference on Computer Vision Workshops (ICCVW), 2025

[Paper]

We present AssemblyHands-X, the first markerless 3D hand-body benchmark for bimanual activities, designed to study the effect of hand-body coordination on action recognition.


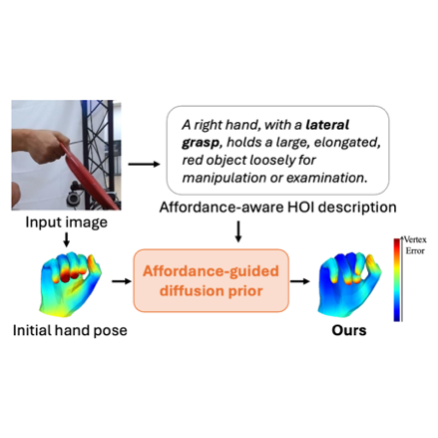

Naru Suzuki, Takehiko Ohkawa, Tatsuro Banno, Jihyun Lee, Ryosuke Furuta, and Yoichi Sato

HANDS, International Conference on Computer Vision Workshops (ICCVW), 2025

[Paper]

We propose a generative prior for hand pose refinement guided by affordance-aware textual descriptions of hand-object interactions. Our method employs a diffusion-based generative model that learns the distribution of plausible hand poses conditioned on affordance descriptions, which are inferred by vision-language models (VLMs).


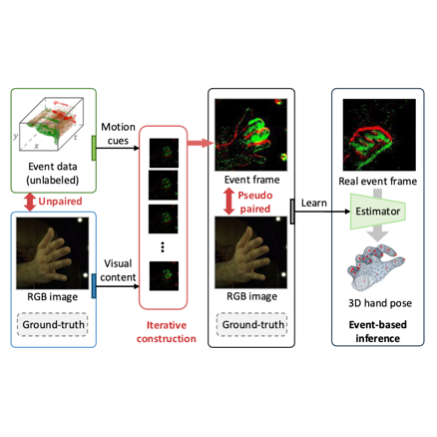

Ruicong Liu, Takehiko Ohkawa, Tze Ho Elden Tse, Mingfang Zhang, Angela Yao, and Yoichi Sato

HANDS, International Conference on Computer Vision Workshops (ICCVW), 2025

[Paper]

This paper presents RPEP, the first pre-training method for event-based 3D hand pose estimation using labeled RGB images and unpaired, unlabeled event data.


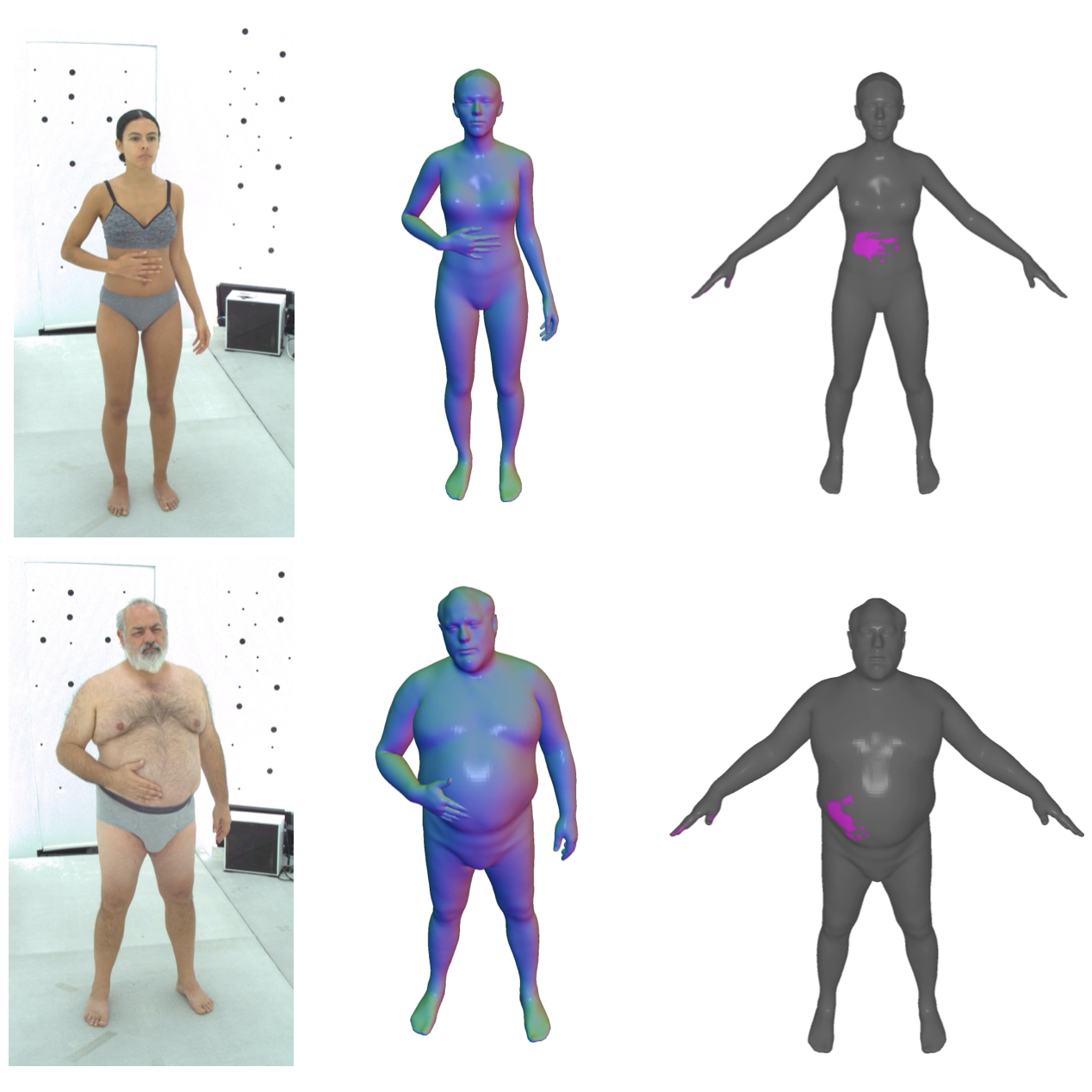

Takehiko Ohkawa, Jihyun Lee, Shunsuke Saito, Jason Saragih, Fabian Prado, Yichen Xu, Shoou-I Yu, Ryosuke Furuta, Yoichi Sato, and Takaaki Shiratori

International Conference on Computer Vision (ICCV), 2025

I-HFM & HANDS, International Conference on Computer Vision Workshops (ICCVW), 2025

[Project] [Paper] [Poster]

We introduce Goliath-SC, the first extensive self-contact dataset with precise body shape registration, consisting of 383K self-contact poses across 130 subjects. Using this dataset, we propose a generative self-contact prior conditioned on body shape parameters, based on body-part-wise latent diffusion with self-attention.


Takehiko Ohkawa

Doctoral Dissertation, 2025

[Overview] [Paper] [Slides]


Contributed as an organizer and challenge committee member

International Conference on Computer Vision Workshops (ICCVW), 2025

Past editions: [ECCV2024] / [ICCV2023]

Hosted challenges:

- AssemblyHands-S2D@ECCV2024: Dual-view hand pose
- AssemblyHands@ICCV2023: Single-view hand pose

Our HANDS workshop will gather vision researchers working on perceiving hands performing actions, including 2D and 3D hand detection, segmentation, pose/shape estimation, tracking, etc. We will also cover related applications, including gesture recognition, hand-object manipulation analysis, hand activity understanding, and interactive interfaces.


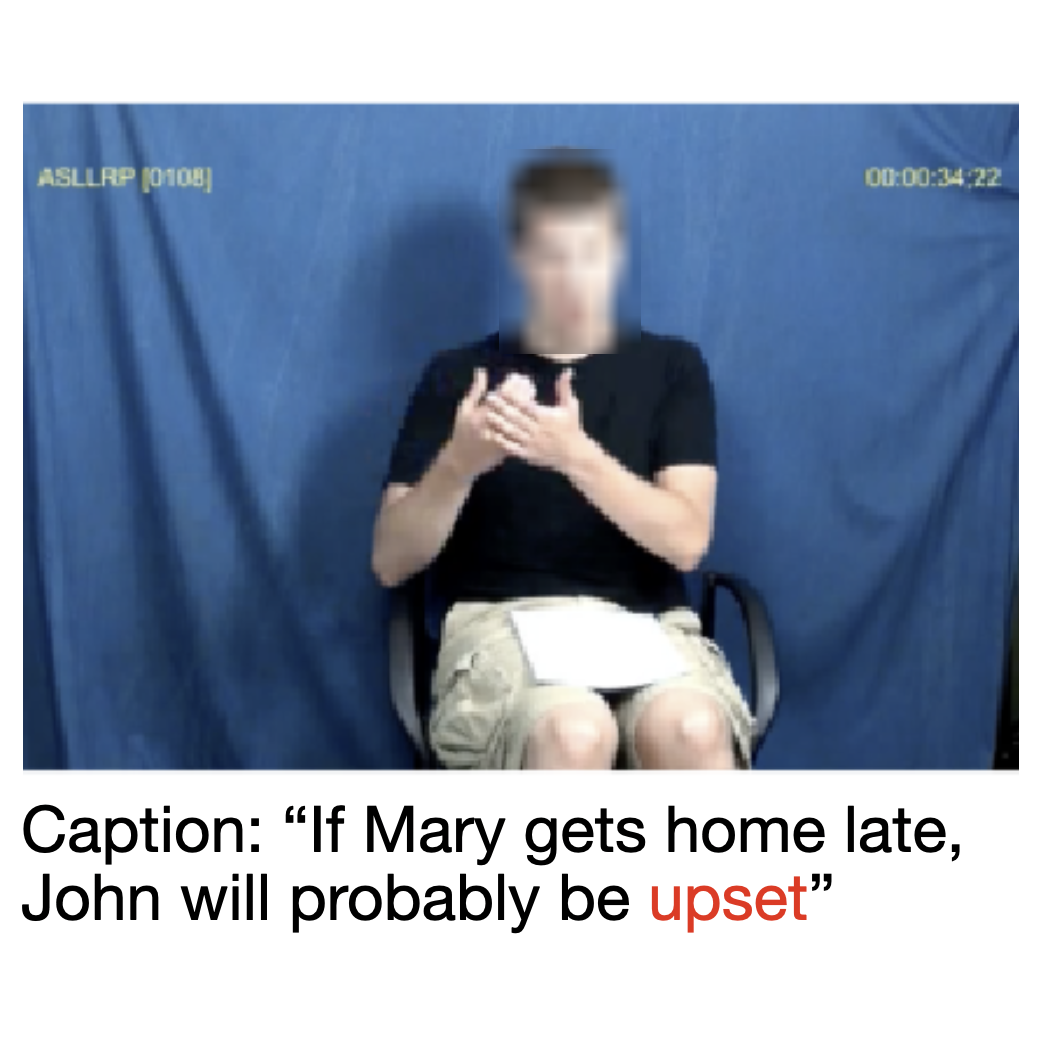

Phoebe Chua*, Cathy Mengying Fang*, Takehiko Ohkawa, Raja Kushalnagar, Suranga Nanayakkara, and Pattie Maes (*equal contribution)

Preprint

[Paper]

We introduce EmoSign, the first sign video dataset containing sentiment and emotion labels for 200 American Sign Language (ASL) videos with open-ended descriptions of emotion cues. Alongside the annotations, we include baseline models for sentiment and emotion classification.


Nie Lin*, Takehiko Ohkawa*, Yifei Huang, Mingfang Zhang, Minjie Cai, Ming Li, Ryosuke Furuta, and Yoichi Sato (*equal contribution)

International Conference on Learning Representations (ICLR), 2025

HANDS, European Conference on Computer Vision Workshops (ECCVW), 2024

Invited Oral Presentation at Meeting on Image Recognition and Understanding (MIRU), 2025

[Project] [Paper] [Code] [Poster]

We present SiMHand, a framework for pre-training 3D hand pose estimation on in-the-wild hand images that share similar hand characteristics.


Takehiko Ohkawa, Takuma Yagi, Taichi Nishimura, Ryosuke Furuta, Atsushi Hashimoto, Yoshitaka Ushiku, and Yoichi Sato

IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2025

LPVL, IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 2024

[Project] [Paper] [Data & Code] [Video]

We present EgoYC2, a novel benchmark for cross-view knowledge transfer of dense video captioning, adapting models from web instructional videos with exocentric views to an egocentric view.


Zicong Fan*, Takehiko Ohkawa*, Linlin Yang*, Nie Lin, Zhishan Zhou, Shihao Zhou, Jiajun Liang, Zhong Gao, Xuanyang Zhang, Xue Zhang, Fei Li, Liu Zheng, Feng Lu, Karim Abou Zeid, Bastian Leibe, Jeongwan On, Seungryul Baek, Aditya Prakash, Saurabh Gupta, Kun He, Yoichi Sato, Otmar Hilliges, Hyung Jin Chang, and Angela Yao (*equal contribution)

European Conference on Computer Vision (ECCV), 2024

[Paper] [Poster]

We present a comprehensive summary of the HANDS23 challenge using the AssemblyHands and ARCTIC datasets. Based on the results of the top submitted methods and more recent baselines on the leaderboards, we perform a thorough analysis of 3D hand(-object) reconstruction tasks.


Yilin Wen, Hao Pan, Takehiko Ohkawa, Lei Yang, Jia Pan, Yoichi Sato, Taku Komura, and Wenping Wang

HANDS, European Conference on Computer Vision Workshops (ECCVW), 2024

[Paper]

We present a novel framework that concurrently tackles hand action recognition and 3D future hand motion prediction.


Ruicong Liu, Takehiko Ohkawa, Mingfang Zhang, and Yoichi Sato

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2024

Invited Poster Presentation at EgoVis Workshop, CVPRW, 2024

Invited Oral Presentation at Forum on Information Technology (FIT), 2024

[Paper] [Code]

We propose a novel Single-to-Dual-view adaptation (S2DHand) solution that adapts a pre-trained single-view estimator to dual views.


Takehiko Ohkawa, Kun He, Fadime Sener, Tomas Hodan, Luan Tran, and Cem Keskin

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023

EgoVis Distinguished Paper Award, CVPR, 2025

Invited Oral Presentation at Ego4D & EPIC Workshop, CVPRW, 2023

Poster Presentation at International Computer Vision Summer School (ICVSS), 2023

HANDS Workshop Benchmark Dataset, ICCVW, 2023

[Paper] [Project] [Code & Data]

We present AssemblyHands, a large-scale benchmark dataset with accurate 3D hand pose annotations, to facilitate the study of challenging hand-object interactions from egocentric videos.


Takehiko Ohkawa, Ryosuke Furuta, and Yoichi Sato

International Journal of Computer Vision (IJCV), 2023

[Paper] [Springer] [Slides]

We present a systematic review of 3D hand pose estimation from the perspective of efficient annotation and learning. 3D hand pose estimation has been an important research area owing to its potential to enable various applications, such as video understanding, AR/VR, and robotics.


Takehiko Ohkawa, Yu-Jhe Li, Qichen Fu, Ryosuke Furuta, Kris M. Kitani, and Yoichi Sato

European Conference on Computer Vision (ECCV), 2022

Invited Poster Presentation at HANDS and HBHA workshops, ECCVW, 2022

Invited Oral Presentation at Meeting on Image Recognition and Understanding (MIRU), 2023

[Paper] [Project] [Slides]

We tackle domain adaptation of hand keypoint regression and hand segmentation to in-the-wild egocentric videos captured under new imaging conditions (e.g., Ego4D).


Koya Tango, Takehiko Ohkawa, Ryosuke Furuta, and Yoichi Sato

HANDS, European Conference on Computer Vision Workshops (ECCVW), 2022

[Paper]

We propose Background Mixup augmentation, which leverages data-mixing regularization for hand-object detection while avoiding the unintended effects produced by naive Mixup.


Takehiko Ohkawa, Takuma Yagi, Atsushi Hashimoto, Yoshitaka Ushiku, and Yoichi Sato

IEEE Access, 2021

[Paper] [IEEE Xplore] [Project] [Code & Data]

We develop a domain adaptation method for hand segmentation that reduces the appearance gap via stylization and learns from pseudo-labels generated by network consensus.


Takehiko Ohkawa, Naoto Inoue, Hirokatsu Kataoka, and Nakamasa Inoue

International Conference on Pattern Recognition (ICPR), 2020

[Paper]

We develop an extended consistency regularization that stabilizes the training of image translation models using real, fake, and reconstructed samples.


JSPS Ikushi Prize, 2026


ACT-X Travel Grant for International Research Meetings, 2024


Google Developer Groups AI for Science - Japan, Dec 2025. [Link (ja)] [Slides]


Professional Service:

Organization:

Conference Travel History:


© Takehiko Ohkawa / Design: jonbarron