Generative Modeling of Shape-Dependent Self-Contact Human Poses

Takehiko Ohkawa1,2* Jihyun Lee1,3* Shunsuke Saito1 Jason Saragih1 Fabian Prada1 Yichen Xu1 Shoou-I Yu1 Ryosuke Furuta2 Yoichi Sato2 Takaaki Shiratori1

1Codec Avatars Lab, Meta

2The University of Tokyo

3KAIST

*Work done during an internship at Meta

IEEE/CVF International Conference on Computer Vision (ICCV), 2025

Abstract

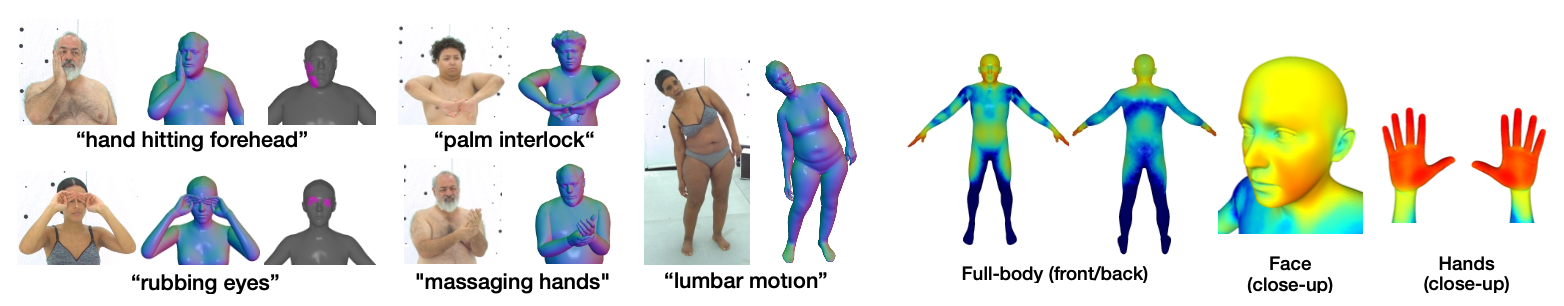

One can hardly model self-contact of human poses without considering underlying body shapes. For example, a belly-rubbing pose that fits a person with a low BMI causes the hand to penetrate the belly when applied to a person with a high BMI. Despite its relevance, existing self-contact datasets lack both variety in self-contact poses and precise body shapes, which prevents conclusive analysis of the relationship between self-contact poses and shapes. To address this, we begin by introducing the first extensive self-contact dataset with precise body shape registration, Goliath-SC, consisting of 383K self-contact poses across 130 subjects. Using this dataset, we propose generative modeling of a self-contact prior conditioned on body shape parameters, based on body-part-wise latent diffusion with self-attention. We further incorporate this prior into single-view human pose estimation, refining the estimated poses to be in contact. Our experiments suggest that shape conditioning is vital to successfully modeling the self-contact pose distribution, and hence improves single-view pose estimation in self-contact.

Goliath-SC Dataset

We offer the first extensive self-contact dataset with varying full-body poses and precise body shape registration, dubbed Goliath-SC. It contains the largest number of self-contact poses among self-contact datasets: 383K poses from 130 subjects. It further provides accurate full-body mesh registration based on 3D scans captured in a multi-camera dome (Goliath); the registrations are converted to SMPL-X to obtain shape parameters. The captured activities cover natural self-contacts that occur in daily life, such as touching the face, body, and hands. The dataset also helps identify the limitations of recent pose estimators, including foundation models, on self-contact data.

Shape-Dependent Self-Contact Pose Modeling with Diffusion Models

We investigate generative prior modeling of full-body poses in self-contact without relying on images. Our approach builds on a new insight: self-contact poses depend on body shape, i.e., the subject's physical identity, which includes (i) skeletal differences in height, limb length, etc., and (ii) surface differences caused by body fat and muscle distribution, as reflected in BMI. Unlike joint distribution modeling between pose and shape with 3D human models such as SMPL and MANO, we aim to model the shape-dependent manifold of self-contact poses. Our proposed model is a shape-conditional generative model based on a diffusion process. Specifically, we develop a latent diffusion model with self-attention, termed PAPoseDiff, which considers the relationships among highly interacting body parts (e.g., hands, body, and face). We leverage the learned diffusion prior to refine 3D poses in self-contact. Given an initial SMPL-X estimate, we refine the pose to reduce its error with respect to the observed 2D keypoints, while maintaining the contact plausibility learned during generative training.
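To make the idea of shape-conditioned, body-part-wise denoising more concrete, the following is a minimal NumPy sketch, not the PAPoseDiff architecture itself. It assumes one latent token per body part, a single untrained self-attention layer, and a standard DDPM noise-prediction objective; the names `PartAttentionDenoiser`, `NUM_PARTS`, `LATENT_DIM`, and `SHAPE_DIM`, and all sizes, are hypothetical choices made for illustration.

```python
import numpy as np

NUM_PARTS = 4    # hypothetical part tokens, e.g. body, left hand, right hand, face
LATENT_DIM = 16  # hypothetical per-part latent size
SHAPE_DIM = 10   # hypothetical number of body shape coefficients


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def timestep_embedding(t, dim):
    """Sinusoidal embedding of a scalar diffusion timestep."""
    half = dim // 2
    freqs = np.exp(-np.log(10000.0) * np.arange(half) / half)
    return np.concatenate([np.sin(t * freqs), np.cos(t * freqs)])


def add_noise(z0, alpha_bar_t, eps):
    """DDPM forward process: z_t = sqrt(a_bar) * z0 + sqrt(1 - a_bar) * eps."""
    return np.sqrt(alpha_bar_t) * z0 + np.sqrt(1.0 - alpha_bar_t) * eps


class PartAttentionDenoiser:
    """Untrained toy denoiser: one self-attention layer over body-part tokens."""

    def __init__(self, rng):
        s = 1.0 / np.sqrt(LATENT_DIM)
        self.w_shape = rng.normal(0.0, s, (SHAPE_DIM, LATENT_DIM))
        self.w_q = rng.normal(0.0, s, (LATENT_DIM, LATENT_DIM))
        self.w_k = rng.normal(0.0, s, (LATENT_DIM, LATENT_DIM))
        self.w_v = rng.normal(0.0, s, (LATENT_DIM, LATENT_DIM))
        self.w_out = rng.normal(0.0, s, (LATENT_DIM, LATENT_DIM))

    def __call__(self, z_t, t, betas):
        # Condition every part token on the body shape and the timestep.
        cond = betas @ self.w_shape + timestep_embedding(t, LATENT_DIM)
        tokens = z_t + cond  # cond broadcasts over the part axis
        q, k, v = tokens @ self.w_q, tokens @ self.w_k, tokens @ self.w_v
        # Each part attends to all parts, so interacting parts can inform each other.
        attn = softmax(q @ k.T / np.sqrt(LATENT_DIM))  # (parts, parts)
        eps_hat = (attn @ v) @ self.w_out              # predicted noise per part
        return eps_hat, attn


rng = np.random.default_rng(0)
model = PartAttentionDenoiser(rng)
z0 = rng.normal(size=(NUM_PARTS, LATENT_DIM))  # stand-in for encoded pose latents
eps = rng.normal(size=z0.shape)
z_t = add_noise(z0, alpha_bar_t=0.5, eps=eps)

betas_a = rng.normal(size=SHAPE_DIM)  # two hypothetical body shapes
betas_b = rng.normal(size=SHAPE_DIM)
eps_a, attn = model(z_t, 10.0, betas_a)
eps_b, _ = model(z_t, 10.0, betas_b)

# Noise-prediction loss that training would minimize for shape A.
loss = float(np.mean((eps_a - eps) ** 2))
```

In this sketch the same noisy latent yields different noise predictions under different shape coefficients, which is the mechanism that lets a trained model place the pose manifold differently for each body shape.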

Results: Shape-Conditional Pose Generation

The video below shows our qualitative results with shape interpolation. When changing the body shapes (from a large to a slim body), the generated poses continuously move on the hand surface while preserving plausible self-contact poses. This indicates that our diffusion model can learn a smooth manifold of self-contact poses with respect to body shape changes.

Results: Single-View Pose Refinement

The figure below shows qualitative results of our refinement. We find that the initial predictions are ambiguous in both contact and depth: interacting parts are not in contact, and large depth errors remain for the hands. Our method can correct such failures without requiring knowledge of where contact occurs. We also test our method in the wild. We observe that pseudo-ground-truth (pseudo-GT) annotations generated by 2D fitting may not handle such self-contact scenes well; thus our method can be leveraged for pseudo-GT registration of self-contact poses in in-the-wild domains.
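The refinement trades off agreement with the 2D keypoints against plausibility under the learned prior. The toy sketch below illustrates that trade-off only under strong assumptions: it optimizes 3D joint positions directly rather than SMPL-X parameters, uses a pinhole camera with unit focal length, and replaces the learned diffusion prior with a hypothetical quadratic pull toward a prior-suggested pose `X_prior`. The weights `lam`, `lr`, and `steps` are illustrative, not values from the paper.

```python
import numpy as np


def project(X, focal=1.0):
    """Pinhole projection of (J, 3) camera-space joints to (J, 2) keypoints."""
    return focal * X[:, :2] / X[:, 2:3]


def energy(X, kp2d, X_prior, lam, focal=1.0):
    """2D reprojection error plus a quadratic stand-in for the pose prior."""
    r = project(X, focal) - kp2d
    return float((r ** 2).sum() + lam * ((X - X_prior) ** 2).sum())


def energy_grad(X, kp2d, X_prior, lam, focal=1.0):
    """Analytic gradient of `energy` with respect to the joint positions."""
    r = project(X, focal) - kp2d
    z = X[:, 2:3]
    g = np.empty_like(X)
    g[:, :2] = 2.0 * r * focal / z
    g[:, 2:3] = -2.0 * focal * (r * X[:, :2]).sum(axis=1, keepdims=True) / z ** 2
    return g + 2.0 * lam * (X - X_prior)


def refine(X_init, kp2d, X_prior, lam=0.1, lr=0.2, steps=200):
    """Plain gradient descent on the combined energy."""
    X = X_init.copy()
    for _ in range(steps):
        X = X - lr * energy_grad(X, kp2d, X_prior, lam)
    return X


rng = np.random.default_rng(0)
X_true = rng.normal(0.0, 0.3, (8, 3)) + np.array([0.0, 0.0, 3.0])  # synthetic joints
kp2d = project(X_true)                                  # observed 2D keypoints
X_prior = X_true + rng.normal(0.0, 0.02, X_true.shape)  # stand-in prior estimate
X_init = X_true + rng.normal(0.0, 0.1, X_true.shape)    # noisy initial estimate

X_ref = refine(X_init, kp2d, X_prior)
err_init = float(np.linalg.norm(X_init - X_true, axis=1).mean())
err_ref = float(np.linalg.norm(X_ref - X_true, axis=1).mean())
```

The reprojection term alone cannot fix depth, because moving a joint along its camera ray leaves its 2D projection unchanged; in this sketch the prior term is what resolves that ambiguity, which mirrors the role the text assigns to the learned prior.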

© Takehiko Ohkawa