Visual Understanding of Human Hands in Interactions

Takehiko Ohkawa

The University of Tokyo

Doctoral Dissertation, 2025

Abstract

Human hand interactions are central to daily activities and communication, providing informative signals for human action, expression, and intent. Visual perception and modeling of hands from images and videos are therefore crucial for various applications, including understanding human interactions in the wild, virtual human modeling in 3D, human augmentation through assistive vision systems, and highly dexterous robotic manipulation.

While computer vision has evolved to estimate hand states ranging from coarse detection to nuanced 3D pose and shape estimation, existing methods fall short in three key challenges. First, they struggle to handle complicated and fine-grained contact scenarios, such as grasping objects (i.e., hand-object contact) or touching one's own body (i.e., self-contact), where occlusions and deformations introduce substantial ambiguity. Second, most models generalize poorly to dynamic and real-world environments due to the domain gap between studio-collected training datasets and in-the-wild testing conditions. Third, beyond geometric estimation, current approaches often lack the ability to link low-level hand states to high-level semantic comprehension, e.g., with action labels or language.

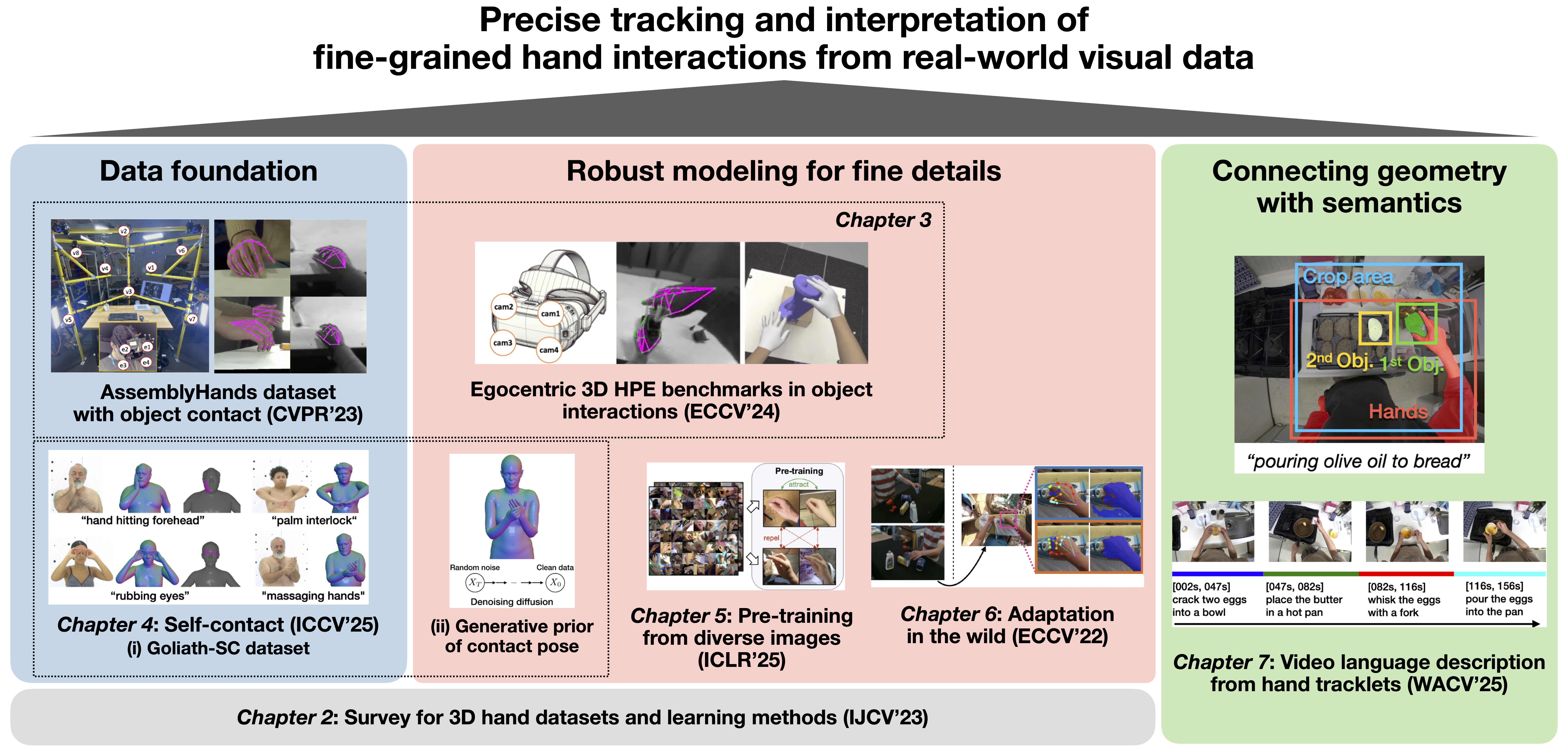

This dissertation addresses these limitations by pursuing the goal of precise tracking and interpretation of fine-grained hand interactions from real-world visual data. To achieve this, the dissertation systematically proposes three key pillars:

- Data foundation: Building diverse and high-quality data infrastructure to enable learning fine-grained hand interactions featuring challenging contact scenarios.

- Robust modeling for fine details: Achieving robust and reliable estimation for fine-grained hand interactions by generalizing and adapting machine learning models, making them resilient to occlusion, noise, and domain shift in in-the-wild scenarios.

- Connecting geometry and semantics: Bridging captured geometric information with semantics to comprehend actions and intentions based on tracked hand states.

The first pillar of this research focuses on building a diverse and high-quality data foundation. This involves investigating and capturing hand interaction datasets that include complex contacts, such as object interaction and self-contact, using multi-camera systems. This approach enables high-precision 3D pose and shape annotations for intricate hand contact, while offering valuable assets to the community.

The second pillar is dedicated to robust modeling to capture fine details. Leveraging the constructed datasets, we develop advanced machine learning methods for generalizable and adaptable estimation in the wild. This includes comprehensive analysis of 3D hand pose estimation tasks during object contact, building model priors from diverse image or pose data for downstream tasks in pose estimation, and proposing adaptation methods to further bridge performance gaps across different recording environments and camera settings.

The third pillar centers on connecting the captured low-level geometric information with high-level semantic understanding. This involves utilizing the predictions for hand geometry in 2D or 3D (e.g., detection, segmentation, and pose) to comprehend the semantics of the interaction. We explore natural language descriptions as semantic signals and propose generating dense video captions from the hand-object tracklets.

Collectively, these three pillars present a consistent and integrated framework to advance the visual understanding of hands in interactions. By combining advanced techniques in data foundation, robust modeling, and semantic understanding, this dissertation contributes to foundational technologies and intelligent systems for human-centric interactions, with broader applications and implications in computer vision.

Publications

Note that * indicates co-first authors.

- T. Ohkawa, R. Furuta, and Y. Sato. Efficient annotation and learning for 3D hand pose estimation: A survey. International Journal on Computer Vision (IJCV), 131:3193-3206, 2023.

- Z. Fan*, T. Ohkawa*, L. Yang*, (20 authors), and A. Yao. Benchmarks and challenges in pose estimation for egocentric hand interactions with objects. In Proceedings of the European Conference on Computer Vision (ECCV), pages 428-448, 2024.

- T. Ohkawa, J. Lee, S. Saito, J. Saragih, F. Prada, Y. Xu, S. Yu, R. Furuta, Y. Sato, and T. Shiratori. Generative modeling of shape-dependent self-contact human poses. To appear in IEEE/CVF International Conference on Computer Vision (ICCV), 2025.

- N. Lin*, T. Ohkawa*, M. Zhang, Y. Huang, M Cai, M. Li, R. Furuta, and Y. Sato. SiMHand: Mining similar hands for large-scale 3D hand pose pre-training. In Proceedings of the International Conference on Learning Representations (ICLR), 2025.

- T. Ohkawa, Y.-J. Li, Q. Fu, R. Furuta, K. M. Kitani, and Y. Sato. Domain adaptive hand keypoint and pixel localization in the wild. In Proceedings of the European Conference on Computer Vision (ECCV), pages 68-87, 2022.

- T. Ohkawa, T. Yagi, T. Nishimura, R. Furuta, A. Hashimoto, Y. Ushiku, and Y. Sato. Exo2EgoDVC: Dense video captioning of egocentric procedural activities using web instructional videos. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2025.

Related Publications

- T. Ohkawa, K. He, F. Sener, T. Hodan, L. Tran, and C. Keskin. AssemblyHands: Towards egocentric activity understanding via 3D hand pose estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 12999-13008, 2023. EgoVis Distinguished Paper Award at CVPR 2025.

- R. Liu, T. Ohkawa, M. Zhang, and Y. Sato. Single-to-dual-view adaptation for egocentric 3D hand pose estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 677-686, 2024.

- T. Ohkawa, T. Yagi, A. Hashimoto, Y. Ushiku, and Y. Sato. Foreground-aware stylization and consensus pseudo-labeling for domain adaptation of first-person hand segmentation. IEEE Access, 9:94644-94655, 2021.

Slides

Citation

@phdthesis{ohkawa:phd2025,

title = {Visual Understanding of Human Hands in Interactions},

author = {Takehiko Ohkawa},

school = {The University of Tokyo},

year = {2025}

}

|

© Takehiko Ohkawa |